Avro vs. Protobuf: Choosing the Superior Data Serialization Method for Kafka and High-Throughput Systems

Should You Use Protobuf or Avro for the Most Efficient Data Serialization?

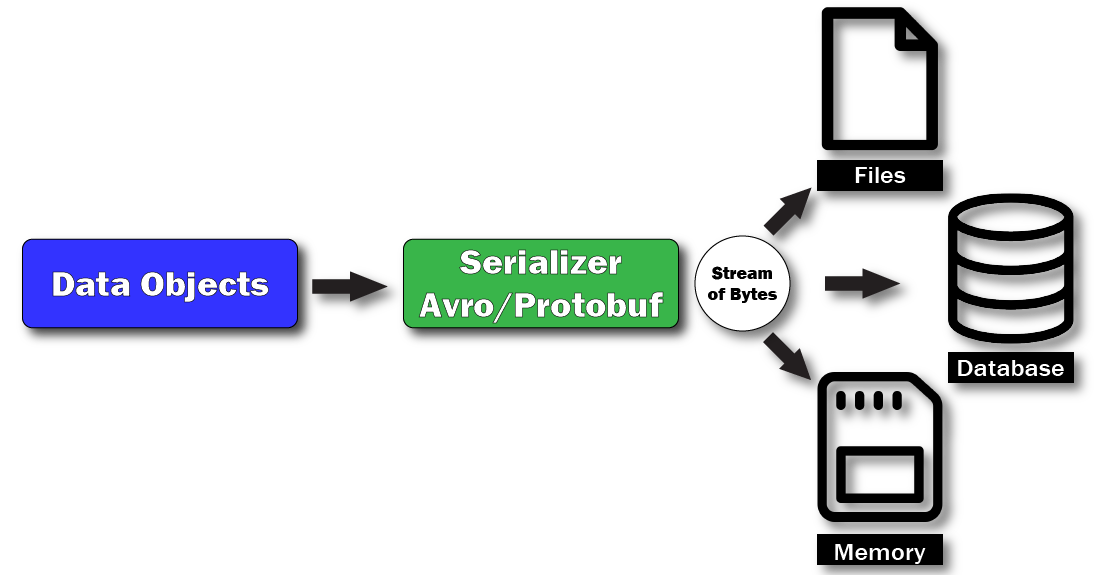

Data serialization plays a crucial role in modern distributed systems, enabling effective communication and storage of structured data. Two widely used serialization methods in the industry are Avro and Protobuf (Protocol Buffers). This article compares the two, highlighting the distinct advantages of each and explaining why one stands out, especially with systems like Kafka.

Avro

Avro is a language-agnostic serialization framework that relies on schemas, written in JSON, to define the data structures being serialized and deserialized. Because the schema rather than the implementation language defines the data, Avro enables compatibility and flexibility between systems regardless of the languages they are written in.

The framework offers dynamic typing: data can be read and written without generating code first, and schemas can evolve over time without breaking compatibility, which makes it easier to accommodate changing data schemas in applications. Integration with dynamic languages such as Python or Ruby allows developers to use their existing tools and expertise, helping the development cycle move more efficiently. Avro data can also be self-describing: Avro container files embed the writer's schema alongside the serialized data, so a reader can decode them without obtaining the schema separately.
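To make schema evolution concrete, here is a minimal sketch of the resolution rules Avro applies when the reader's schema differs from the writer's. It is a simplified illustration in plain Python, not the Avro library; the `User` record, its fields, and the `resolve` helper are hypothetical names chosen for the example.

```python
# Simplified illustration of Avro-style schema resolution (not the Avro library).
# Avro schemas are JSON documents, so they are written here as plain dicts.

WRITER_SCHEMA = {
    "type": "record",
    "name": "User",  # hypothetical record name
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "name", "type": "string"},
    ],
}

# A newer schema that adds a field. The default is what keeps old data readable.
READER_SCHEMA = {
    "type": "record",
    "name": "User",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "name", "type": "string"},
        {"name": "email", "type": "string", "default": ""},
    ],
}


def resolve(record, writer_schema, reader_schema):
    """Project a record written with writer_schema onto reader_schema."""
    writer_fields = {f["name"] for f in writer_schema["fields"]}
    resolved = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in writer_fields:
            # Field exists on both sides: take the written value.
            resolved[name] = record[name]
        elif "default" in field:
            # Field is new to the reader: fall back to its default.
            resolved[name] = field["default"]
        else:
            raise ValueError(f"reader field {name!r} was not written and has no default")
    # Fields known only to the writer are never visited, so they are dropped.
    return resolved


old_record = {"id": 42, "name": "Ada"}
new_view = resolve(old_record, WRITER_SCHEMA, READER_SCHEMA)
print(new_view)
```

The same helper works in the other direction: a consumer still on the older schema simply ignores the `email` field written by a newer producer.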

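When it comes to working with Kafka, Avro functions well by offering efficient and schema-aware serialization. Individual Kafka messages usually do not carry the full schema; instead, producers commonly register schemas in a schema registry and tag each message with a schema ID, so consumers can fetch the right schema and decode data without schema files being distributed by hand. Avro also supports schema evolution, enabling compatibility between producers and consumers as schemas change over time.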
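In practice, many Kafka deployments pair Avro with a schema registry, so that each message carries a small schema identifier rather than the schema itself. The sketch below assumes the Confluent Schema Registry wire format (a zero "magic" byte, a 4-byte big-endian schema ID, then the Avro payload), which this article does not otherwise cover. The `frame` and `unframe` helpers and the schema ID are hypothetical, and the payload is a stand-in for real Avro bytes.

```python
import struct

MAGIC_BYTE = 0  # leading byte in the assumed Confluent wire format


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes):
    """Split a framed message into (schema_id, avro_payload)."""
    if len(message) < 5:
        raise ValueError("message too short to contain a schema ID header")
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte {magic}")
    return schema_id, message[5:]


# Stand-in payload; a real producer would put Avro binary data here.
framed = frame(schema_id=7, avro_payload=b"\x02")
schema_id, payload = unframe(framed)
print(schema_id, payload)
```

A consumer would use the recovered schema ID to look up the writer's schema, then decode the remaining bytes against it.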

Protobuf

Protobuf has some similarities to Avro. It is also language-agnostic and uses schemas to define data structures. However, Protobuf employs static typing and code generation for data serialization and deserialization. This approach requires compiling the schema into language-specific classes or libraries using the protoc compiler.

Protobuf’s key strengths are its high-performance serialization and deserialization, coupled with a compact binary representation of data. This combination is especially valuable in applications that demand high throughput, such as real-time event streaming systems like Kafka. Because message structures are defined ahead of time and compiled into language-specific classes or libraries, Protobuf offers better type safety and improved performance. It is also supported across a wide range of programming languages and platforms, making it an excellent choice for applications with diverse technology stacks.
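To show where the compactness comes from, here is a minimal hand-rolled sketch of the Protobuf wire format for two field types. It is plain Python for illustration only, not the `protobuf` library or generated code; the field numbers and values are arbitrary, and the function names are hypothetical.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        byte = value & 0x7F          # low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow: set continuation bit
        else:
            out.append(byte)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Encode an integer field: key (field number + wire type 0), then varint."""
    key = (field_number << 3) | 0
    return encode_varint(key) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Encode a string field: key (wire type 2), byte length, then UTF-8 bytes."""
    data = text.encode("utf-8")
    key = (field_number << 3) | 2
    return encode_varint(key) + encode_varint(len(data)) + data


# Field 1 = 150 (integer), field 2 = "testing" (string)
message = encode_int_field(1, 150) + encode_string_field(2, "testing")
print(message.hex())  # 089601120774657374696e67
```

The whole message is 12 bytes: field names never appear on the wire, only small numeric tags, and small integers take fewer bytes than large ones.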

Challenges with Protobuf: Code Generation and Binary Format

While code generation may initially seem like an extra step in the development workflow, it brings significant benefits in terms of type safety and performance. By generating strongly typed classes in your preferred language, Protobuf reduces the likelihood of errors and enhances compatibility with language-specific features. The inconvenience of code generation is minimal over time, as it can be seamlessly integrated into the build pipeline.

Protobuf’s binary format is not human-readable (Avro, by comparison, defines its schemas in JSON and offers a JSON encoding alongside its binary one), because it prioritizes efficient machine processing over readability. In high-throughput, real-time systems like Kafka, this compact binary format enables faster processing, a critical advantage when handling large volumes of data. Additionally, there are tools available that decode and visualize messages, addressing concerns about readability during debugging or development.
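As a rough idea of what such decoding tools do under the hood, the sketch below walks a Protobuf-encoded byte string without any schema and lists the raw fields it finds. It is a simplified illustration in plain Python, not any particular tool's implementation; `decode_raw` is a hypothetical helper, and it does not handle the deprecated group wire types.

```python
def read_varint(buf: bytes, pos: int):
    """Read a base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        if pos >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # continuation bit clear: last byte
            return result, pos
        shift += 7


def decode_raw(buf: bytes):
    """Return a list of (field_number, wire_type, raw_value) tuples."""
    fields = []
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:                      # varint
            value, pos = read_varint(buf, pos)
        elif wire_type in (1, 5):               # fixed 64-bit / fixed 32-bit
            size = 8 if wire_type == 1 else 4
            if pos + size > len(buf):
                raise ValueError("truncated fixed-width field")
            value = buf[pos:pos + size]
            pos += size
        elif wire_type == 2:                    # length-delimited
            length, pos = read_varint(buf, pos)
            if pos + length > len(buf):
                raise ValueError("truncated length-delimited field")
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        fields.append((field_number, wire_type, value))
    return fields


sample = bytes.fromhex("089601120774657374696e67")
for field_number, wire_type, value in decode_raw(sample):
    print(field_number, wire_type, value)
```

Without the schema the output shows only field numbers and raw values; mapping those numbers back to field names and types is what a schema-aware viewer adds.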

The Winner?

While Avro may be suitable for applications that prioritize schema flexibility and human readability, Protobuf emerges as the clear winner in terms of performance, type safety, and cross-platform compatibility. Its high-performance serialization, compact binary format, and extensive language support make it the preferred choice for data serialization in Kafka.

Are you considering utilizing Protobuf with Kafka? With Infrared360, our solution for simplifying Kafka administration and monitoring, you can use any Protobuf template to display message content within Kafka. You can define the structure or format of the message content, and Infrared360 allows you to view the message content according to that specific Protobuf template within the Kafka environment.

To explore how Infrared360 can assist you in maximizing the benefits of Protobuf and Kafka, we invite you to schedule a conversation with us.