The Complete Guide to IT Infrastructure Monitoring: Enhancing Performance and Security Across Your Systems

Introduction to IT Infrastructure Monitoring

This guide explores the essential components of IT infrastructure monitoring, explaining why each one matters and how to implement it effectively to enhance system performance and security. Because IT environments are central to business operations, the ability to anticipate, manage, and respond to potential issues is indispensable.

Effective monitoring prevents costly downtime and supports scalable growth and technological innovation, making it crucial for IT professionals. This guide offers actionable insights and strategies to strengthen your IT systems, enhance security, boost operational efficiency, and prepare your infrastructure for future challenges. Whether you’re an IT manager or a technical specialist, understanding these components will empower you to make informed decisions that drive organizational success.

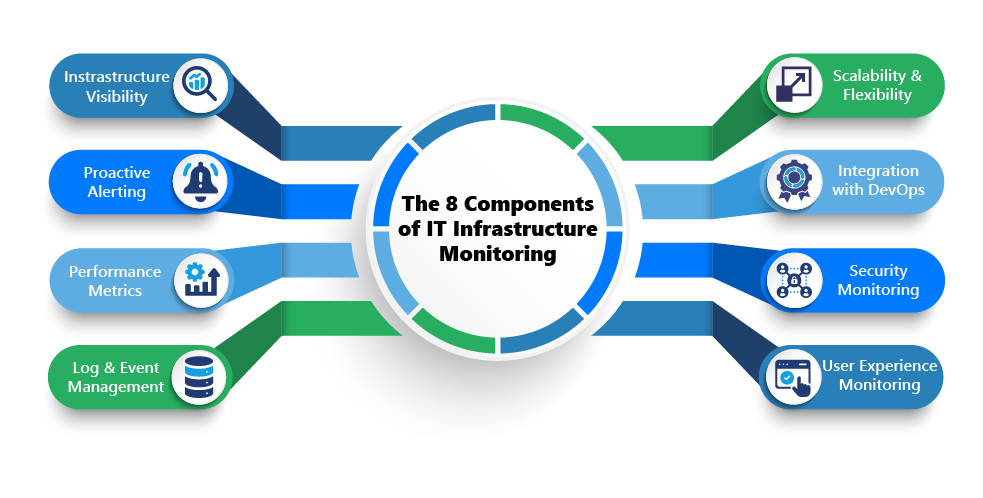

Critical Components of IT Infrastructure Monitoring

Effective IT infrastructure monitoring ensures system reliability, efficiency, and security. Each component is vital in maintaining high performance and adapting to the evolving demands of modern IT environments.

- Infrastructure Visibility: Achieving comprehensive visibility across all IT infrastructure (servers, networks, databases, and applications) is essential. With that visibility, IT teams can quickly identify and resolve performance bottlenecks and security vulnerabilities, ensuring smooth and secure operations.

- Proactive Alerting and Notification: This system is crucial for notifying IT staff about anomalies as they occur, enabling rapid responses to prevent potential downtime. For instance, configuring alerts for disk usage thresholds ensures that IT teams can proactively manage resources before system performance is compromised.

- Performance Metrics and Analytics: Continuous monitoring of key performance indicators such as system uptime, response times, and transaction volumes is essential for data-driven management. Analytics tools predict potential issues, allowing for preemptive adjustments to avert system failures.

- Log and Event Management: Logs provide valuable historical data for diagnosing problems and demonstrating security compliance, and the effective collection, analysis, and correlation of log data from diverse sources within the IT environment supports root cause analysis and troubleshooting. Logs are inherently reactive, however: they do not provide the real-time insight that immediate decision-making can require.

- Scalability and Flexibility: Monitoring solutions must be scalable to handle business growth and flexible enough to integrate new technologies seamlessly. A scalable monitoring system dynamically adjusts its capacity based on transaction volumes to ensure consistent performance during peak periods.

- Integration with DevOps Practices: Integrating monitoring tools with DevOps practices enhances collaboration and operational efficiency. This supports continuous deployment cycles with real-time monitoring data, facilitating automated processes such as code deployment where critical alerts can initiate rollbacks.

- Security Monitoring: Continuous monitoring for suspicious activities is essential for protecting against data breaches and ensuring compliance. Role-based access controls and automated alerts for unusual access attempts significantly enhance security responsiveness and internal compliance.

- User Experience Monitoring: Monitoring from the user’s perspective ensures applications meet performance standards. Insights into issues like slow load times or transaction bottlenecks enable IT teams to make necessary optimizations, enhancing user satisfaction and system usability.

Each of these components is integral to a strong IT monitoring strategy, ensuring organizations meet current operational demands and are well-prepared for future challenges. This comprehensive approach is crucial for maintaining high system availability, security, and performance as IT landscapes evolve.

Infrastructure Visibility

Comprehensive infrastructure visibility ensures optimal performance and security across key IT components such as servers, networks, databases, and applications. This visibility allows IT managers to proactively detect and resolve issues, enhancing system stability and performance. Monitoring encompasses several aspects:

- Server Monitoring: Assessing server health, usage statistics, and performance metrics to prevent failures.

- Network Monitoring: Tracking data flow, usage, and potential bottlenecks to ensure smooth network operations. Implementing SNMP-based tools can offer real-time insights into network traffic and latency, helping to manage network loads effectively.

- Database Management: Ensuring that databases are optimized, accessible, and secure to support critical business operations.

- Application Performance: Observing application performance to ensure it meets user expectations and operational requirements. Application Performance Monitoring (APM) solutions are vital for identifying and resolving issues that affect user interactions.
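
As a minimal sketch of the server-monitoring item above, the following Python snippet takes a disk-usage snapshot with the standard library's `shutil.disk_usage`. The function names and the 90% threshold are illustrative assumptions, not part of any particular monitoring product.

```python
import shutil


def disk_used_percent(path: str = ".") -> float:
    """Return the percentage of space used on the filesystem holding `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return 100.0 * usage.used / usage.total


def needs_attention(used_percent: float, threshold: float = 90.0) -> bool:
    """True when usage is at or above the (assumed) alert threshold."""
    return used_percent >= threshold


if __name__ == "__main__":
    pct = disk_used_percent(".")
    print(f"disk used: {pct:.1f}% attention={needs_attention(pct)}")
```

A real deployment would collect the same figure for every monitored host and feed it into the alerting layer rather than printing it.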

Middleware Monitoring Challenges

Despite middleware's critical role in facilitating communication between different applications and services, monitoring it poses unique challenges. Middleware components like application servers, message queues, and service buses are pivotal in integrating and managing data flow across systems. However, challenges arise from the complexity of middleware environments, the diversity of middleware solutions, and the often limited visibility provided by conventional monitoring tools.

Effective middleware monitoring requires a clear view of all interactions and transactions within these systems. It involves:

- Transaction Tracking: Monitoring the throughput and performance of message queues to ensure data flows seamlessly between services without bottlenecks.

- Error Rate Monitoring: Keeping tabs on error rates and latency to quickly address and rectify any issues that could disrupt business operations.

To tackle these challenges, it’s advisable to employ dedicated middleware monitoring tools designed to handle specific requirements of diverse IT environments. Setting up customized alerts for unusual patterns in message throughput and error rates can help maintain system integrity and prevent issues from escalating.
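
The throughput and error-rate checks described above could be sketched roughly as follows. The `QueueSample` fields and both thresholds are hypothetical; a real middleware product exposes its own counters and names.

```python
from dataclasses import dataclass


@dataclass
class QueueSample:
    """One polling interval for a message queue (hypothetical fields)."""
    seconds: float     # length of the interval
    messages_out: int  # messages delivered during the interval
    errors: int        # failed deliveries during the interval


def throughput(sample: QueueSample) -> float:
    """Messages delivered per second in this interval."""
    return sample.messages_out / sample.seconds


def error_rate(sample: QueueSample) -> float:
    """Fraction of attempted deliveries that failed."""
    attempts = sample.messages_out + sample.errors
    return sample.errors / attempts if attempts else 0.0


def check_queue(sample, min_throughput=50.0, max_error_rate=0.02):
    """Return a list of alert strings; both thresholds are illustrative."""
    alerts = []
    if throughput(sample) < min_throughput:
        alerts.append("throughput below expected floor")
    if error_rate(sample) > max_error_rate:
        alerts.append("error rate above ceiling")
    return alerts
```

Calling `check_queue` once per polling interval for each queue gives a simple stream of alerts that can be routed to whoever owns that queue.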

By ensuring comprehensive visibility and proactive monitoring across all layers of IT infrastructure, organizations can significantly enhance their operational efficiency and prevent downtime, thereby supporting continuous business growth and technological advancement.

Proactive Alerting and Notification

Proactive alerting systems are essential for notifying IT staff about potential issues before they escalate into significant problems. Customization and granularity are key components of an effective alerting strategy tailored to meet specific organizational needs and IT environments.

Customizing Alert Systems

Customizing alert systems to reflect critical metrics and operational thresholds relevant to your infrastructure minimizes noise and reduces alert fatigue among IT teams. For instance:

- CPU Usage Alerts: Setting thresholds for CPU usage that prompt immediate alerts when exceeded.

- Memory Thresholds: Alerts for memory capacity reaching critical levels to prevent system overloads.

To refine your alerting strategy, consider implementing:

- Multi-Level Thresholds: Initial warnings prompt preemptive actions, escalating to more urgent alerts if issues are unresolved.

- Adaptable Parameters: Ensure your system can adjust its alert parameters as your infrastructure evolves and new applications are deployed.
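
One way to picture multi-level thresholds and adaptable parameters is the sketch below. The metric names, percentage levels, and the 15-minute escalation window are assumptions chosen for illustration; the thresholds live in a plain dictionary so they can be changed as the infrastructure evolves.

```python
from typing import Optional

# Hypothetical warning/critical levels per metric, in percent.
THRESHOLDS = {
    "cpu": {"warning": 75.0, "critical": 90.0},
    "memory": {"warning": 80.0, "critical": 95.0},
}


def classify(metric: str, value: float, thresholds=THRESHOLDS) -> Optional[str]:
    """Return "critical", "warning", or None for a single reading."""
    levels = thresholds[metric]
    if value >= levels["critical"]:
        return "critical"
    if value >= levels["warning"]:
        return "warning"
    return None


def escalate(level: Optional[str], minutes_unresolved: float,
             escalate_after: float = 15.0) -> Optional[str]:
    """Promote an unresolved warning to critical after a grace period."""
    if level == "warning" and minutes_unresolved >= escalate_after:
        return "critical"
    return level
```

For example, `classify("cpu", 80.0)` yields a warning, and `escalate` turns that warning into a critical alert if nobody has resolved it within the grace period.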

Real-time Alerting Systems

Implementing real-time alerting systems is crucial for minimizing response times to potential disruptions, thus reducing downtime and lessening the impact on end-users.

Challenges of Conventional ITIM Solutions

Below is a table illustrating the latency issues often encountered with conventional ITIM solutions that rely on agent-based log data monitoring, showing average CPU usage over time:

| Reading | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Recording in Log (%) | 40 | 40 | 40 | 60 | 80 | 85 | 90 |
| Average (%) | 40 | 40 | 40 | 45 | 52 | 58 | 62 |

This logging methodology can lead to the following:

- Inaccurate or Delayed Responses: Because log data is averaged, alerts may not fire until actual CPU usage is already well above the set threshold.

- Loss of Granularity: Inability to capture detailed trends and minute fluctuations in CPU usage.

- Increased Risk of False Positives: Averaging may obscure actual values, increasing the likelihood of alerts triggered by exceptional CPU spikes rather than consistent patterns.
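
The averaging effect in the table can be reproduced with a few lines of Python. The readings come from the "Recording in Log" row; the 80% alert threshold is an assumption added for illustration, since the table does not state one.

```python
readings = [40, 40, 40, 60, 80, 85, 90]  # "Recording in Log" row, percent
THRESHOLD = 80  # assumed alert threshold, not stated in the table


def running_averages(values):
    """Cumulative mean after each reading."""
    averages, total = [], 0
    for count, value in enumerate(values, start=1):
        total += value
        averages.append(total / count)
    return averages


def first_breach(values, threshold):
    """1-based position of the first value at or above threshold, else None."""
    for position, value in enumerate(values, start=1):
        if value >= threshold:
            return position
    return None


averages = running_averages(readings)
print([round(a) for a in averages])       # [40, 40, 40, 45, 52, 58, 62]
print(first_breach(readings, THRESHOLD))  # 5
print(first_breach(averages, THRESHOLD))  # None
```

With the assumed 80% threshold, the raw readings cross it at the fifth reading, while the running average has still not crossed it by the seventh. That gap is the delayed response described in the list above.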

Integration with Log Management

As we will explore in the “Log and Event Management” section, while real-time alerting provides immediate incident response, integrating these systems with log management strategies offers comprehensive insights for immediate and long-term operational strategy. This integration enhances the overall monitoring framework by combining the immediacy of real-time alerting with the detailed historical context provided by logs, forming a robust approach to IT infrastructure management.

By focusing on customization to meet specific needs and investing in technologies that support real-time responsiveness, IT infrastructures can adopt a proactive approach that prevents manageable issues from evolving into critical disruptions. This proactive alerting framework is essential for maintaining system integrity and operational continuity. Integrating real-time monitoring systems ensures immediate detection and quick action, effectively addressing the challenges highlighted in the table.

Performance Metrics and Analytics

Monitoring performance metrics is crucial for maintaining the reliability and efficiency of IT systems. These metrics act as indicators of system health and performance, providing the insight needed for timely decision-making and problem resolution.

Key Performance Indicators

Key performance metrics include:

- Server Uptime: Measures the continuous operation time of servers, indicating stability.

- Network Latency: Tracks delays in data transmission over the network, impacting user experience.

- Application Response Times: Assesses how quickly applications respond to user requests, which is crucial for user satisfaction.

- Resource Utilization Rates: Monitors CPU and memory usage to prevent overloads that can cause system slowdowns or crashes.

IT teams can proactively detect emerging patterns and address potential issues by continuously monitoring these metrics. For businesses with specific needs, such as e-commerce platforms, metrics like web server response time and transaction completion rate are particularly critical. Utilizing tools with customizable dashboards and real-time data visualization can significantly enhance monitoring effectiveness, allowing for swift adjustments based on the latest data.
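
Two of the indicators above, uptime and response time, reduce to short calculations. The sketch below assumes a nearest-rank definition of percentile, which is one common convention among several; the function names are illustrative.

```python
import math


def availability_percent(total_seconds: float, downtime_seconds: float) -> float:
    """Share of the period during which the server was up, in percent."""
    return 100.0 * (total_seconds - downtime_seconds) / total_seconds


def percentile(values, pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]
```

Percentiles are often more informative than averages for response times, because a handful of very slow requests can hide behind a healthy-looking mean.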

Leveraging Advanced Analytics

Advanced analytics in IT monitoring are pivotal for proactive decision-making and capacity planning. These analytics involve:

- Predictive Analytics: Using historical data to forecast potential system failures or performance bottlenecks.

- Machine Learning Algorithms: Automating data analysis to enhance predictive accuracy and operational efficiency.

This proactive approach minimizes downtime and supports strategic resource allocation and infrastructure planning. Implementing advanced analytics necessitates integrating sophisticated tools capable of handling extensive datasets and complex computations. These tools should integrate with monitoring systems to enhance data accuracy and utility.

For instance, predictive models can simulate various scenarios and their impacts on system performance, equipping IT managers with the information to make decisions regarding system upgrades or adjustments.
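
As a deliberately simple stand-in for the predictive models mentioned above, the following sketch fits a straight line to evenly spaced historical samples and estimates how long until a capacity limit is reached. Real predictive analytics would typically use richer models; the function names and the linear-trend assumption are illustrative only.

```python
def fit_line(ys):
    """Least-squares slope and intercept for samples taken at x = 0, 1, 2, ...

    Requires at least two samples.
    """
    n = len(ys)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(ys, limit):
    """Estimated sampling periods after the last sample until the trend
    reaches `limit`. Returns None when the trend is flat or falling."""
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None
    x_at_limit = (limit - intercept) / slope
    return max(0.0, x_at_limit - (len(ys) - 1))
```

Given weekly disk-usage samples of 50, 52, 54, 56, and 58 percent, for instance, the fitted trend rises two points per week, so a 90% limit would be reached roughly 16 weeks after the last sample.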

Organizations can effectively leverage performance metrics and advanced analytics to ensure their IT systems are reliable and primed for future growth and evolving challenges. This strategic use of data enhances current operations and prepares systems for future demands, securing technological resilience.

Log and Event Management

While effective log management is a component of IT infrastructure monitoring, it should not be the sole focus due to inherent limitations in dealing solely with historical data. Relying exclusively on logs can lead to delayed responses to issues, as logs typically record events after they have occurred. This section discusses optimizing log management while emphasizing its role as part of a broader, proactive monitoring strategy.

Log Aggregation and Analysis

Log aggregation and analysis, though necessary, are inherently reactive:

- Delayed Insights: Logs provide data after events have transpired, hindering the ability to address real-time issues.

- Complex Data Integration: While centralizing data from servers, applications, and network devices offers a comprehensive view, it often involves sifting through massive amounts of data to find relevant information.

- Dependence on Historical Data: Traditional log analysis relies heavily on historical data, which limits the ability to predict and prevent future problems before they occur.

Custom Log Definitions and Parsing

While customizing log definitions and parsing offers more targeted data, it still has limitations:

- Static Configuration: Custom log parameters must be predefined, which can miss unforeseen or novel events not captured within the existing framework.

- Structural Rigidity: Relying on structured data formats like JSON or XML can restrict the flexibility needed to adapt quickly to new data types or unusual patterns.
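
The static-configuration limitation is easy to see in a small parser. The line format and field names below are hypothetical; the point is that any line not matching the predefined pattern falls through, so the sketch keeps it as unparsed instead of silently dropping it.

```python
import re

# Hypothetical format: "2024-05-01T12:00:00Z ERROR payment-api Timeout after 30s"
LINE_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>DEBUG|INFO|WARN|ERROR)\s+"
    r"(?P<service>\S+)\s+(?P<message>.*)$"
)


def parse_line(line: str) -> dict:
    """Parse one log line into named fields, or mark it as unparsed."""
    text = line.strip()
    match = LINE_PATTERN.match(text)
    if match:
        return match.groupdict()
    # Lines outside the predefined format are kept for later review.
    return {"unparsed": text}
```

Tracking how many lines end up unparsed is a cheap signal that the predefined format no longer covers what the systems are actually emitting.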

Event Correlation and Anomaly Detection

Advanced techniques such as correlation and anomaly detection enhance log management but do not replace the need for real-time monitoring:

- Post-event Analysis: These techniques typically analyze events after they have occurred, which may delay identifying issues that require immediate attention.

- Complex Implementation: Employing sophisticated machine learning algorithms to detect anomalies involves significant setup and maintenance, and it may still fail to catch all anomalies in real time.
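
For a sense of what post-event anomaly detection looks like at its simplest, here is a z-score check over a series of per-interval event counts. This is a basic statistical technique, not the machine learning approach mentioned above, and the default threshold is an assumption.

```python
from statistics import mean, stdev


def zscore_anomalies(values, threshold=3.0):
    """Indices of values lying more than `threshold` sample standard
    deviations from the mean of the series."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

Note that the check runs over data that has already been collected, and that a large outlier inflates the standard deviation it is measured against. Both are small-scale versions of the limitations listed above.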

Complementing Real-time Alerting: Integrating log management with real-time data monitoring and proactive alerting systems, as discussed in the “Proactive Alerting and Notification” section, ensures a more comprehensive strategy that addresses immediate concerns and long-term operational insights. This combination enhances the overall responsiveness of IT operations, balancing immediate issue detection with deep analytical insights.

Ensuring Scalability and Flexibility

Ensuring scalability and flexibility in your IT infrastructure monitoring strategy is essential to accommodate organizational growth and adapt to evolving technology landscapes. Scalability means the monitoring system can handle increasing workloads—tracking more devices, transactions, or interactions without performance degradation.

Key strategies to achieve scalability and flexibility include:

- Agentless Monitoring Solutions: Utilizing agentless monitoring systems can dramatically simplify scalability. Without installing agents on each monitored device, these systems can scale more efficiently and with less overhead, avoiding the complexities associated with agent-based setups.

- Cloud-based and Browser-based Solutions: Implementing cloud-based and browser-based monitoring tools allows users to access monitoring data from anywhere, facilitating management across distributed environments. These solutions adjust dynamically to workload changes, ensuring robust performance during peak operational periods.
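
The agentless idea can be illustrated with a reachability probe that runs entirely from a central monitoring host and needs nothing installed on the target. This is a minimal sketch using Python's standard `socket` module; the function name and timeout are illustrative, and real agentless tools gather far more than port reachability.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within
    `timeout` seconds, False on refusal, timeout, or other socket error."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Because the probe only needs network access to the target, adding another monitored device means adding one more entry to a host list rather than deploying software to it.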

Flexibility is crucial for integrating new technologies and adapting to new business requirements:

- Modular Architecture: Adopt tools that support a modular approach, allowing for the seamless integration of emerging technologies such as IoT devices or blockchain.

- Configuration Management: A robust configuration management strategy ensures that your monitoring system adapts quickly to changes in the IT environment without requiring extensive manual reconfigurations.

Integrating Monitoring with DevOps

Integrating monitoring into DevOps practices significantly enhances operational efficiency and fosters continuous improvement. DevOps brings development and operations processes together around continuous integration (CI), continuous delivery (CD), and rapid feedback, so timely monitoring data is critical to it.

Effective integration involves:

- Real-time Monitoring and Feedback: Utilizing real-time, agentless monitoring tools within DevOps workflows provides immediate insights into system performance and health. This real-time data is crucial for DevOps teams to rapidly identify and address issues arising during development cycles.

- Enhanced Collaboration: Browser-based monitoring tools ensure that all team members, regardless of location, can access the same real-time data, improving collaboration and speeding up decision-making processes.

Additional benefits include:

- Automated Operations: Integration of monitoring with automated deployment tools helps manage application deployments more effectively, ensuring that any performance or stability issues can trigger automatic rollback or scaling actions.

- Data-driven Decisions: With comprehensive visibility into application and system performance, teams can make informed decisions that improve operational efficiency and product quality.
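
The automated rollback and scaling behavior described above might be wired into a deployment pipeline along these lines. The `ReleaseHealth` fields, the action names, and every threshold are hypothetical; an actual pipeline would read these values from its monitoring tool and call its own deployment tooling.

```python
from dataclasses import dataclass


@dataclass
class ReleaseHealth:
    """Metrics observed shortly after a deployment (hypothetical fields)."""
    error_rate: float      # fraction of failed requests
    p95_latency_ms: float  # 95th percentile response time
    cpu_percent: float     # average CPU across instances


def post_deploy_action(health: ReleaseHealth, max_error_rate: float = 0.05,
                       max_latency_ms: float = 800.0,
                       scale_cpu: float = 85.0) -> str:
    """Decide what the pipeline should do next; thresholds are illustrative."""
    if health.error_rate > max_error_rate or health.p95_latency_ms > max_latency_ms:
        return "rollback"
    if health.cpu_percent > scale_cpu:
        return "scale_out"
    return "proceed"
```

Stability problems take priority over load in this sketch: a release that is failing requests is rolled back even if it would also benefit from more capacity.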

Organizations can achieve high operational efficiency and agility by leveraging agentless, scalable monitoring solutions and integrating them deeply into DevOps practices. This approach supports rapid scaling and ensures that IT systems are robust, reliable, and ready to meet future challenges.

Advanced Security Monitoring

Security monitoring plays a pivotal role in safeguarding data and ensuring compliance with regulatory standards within IT infrastructure. Effective security monitoring strategies involve continuously scrutinizing the IT environment to detect, address, and mitigate potential security threats and vulnerabilities. This includes utilizing technologies such as intrusion detection systems (IDS), firewalls, and antivirus software, all integrated into a cohesive monitoring framework that spans the entire network.

A critical component of advanced security monitoring is the implementation of role-based access control systems, particularly those that use a “trusted spaces” model. This model ensures that:

- Role-Based Access: Access to sensitive data and critical system functionalities is strictly controlled based on user roles, significantly enhancing internal security and compliance.

- Segmented Visibility: Users can view and manage only the IT environment segments that pertain to their role, reducing the risk of internal threats and data breaches.
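
Role-based access with segmented visibility can be reduced to a mapping from roles to the infrastructure segments they may see. The role names, segment names, and resource records below are invented for illustration and do not describe any specific product's model.

```python
# Hypothetical mapping of roles to the segments they may view and manage.
ROLE_SEGMENTS = {
    "mq_admin": {"message-queues", "app-servers"},
    "dba": {"databases"},
    "auditor": set(),
}


def visible_resources(role, resources, role_segments=ROLE_SEGMENTS):
    """Return only the resources whose segment the role is allowed to see.

    Unknown roles see nothing (deny by default).
    """
    allowed = role_segments.get(role, set())
    return [r for r in resources if r["segment"] in allowed]


def can_manage(role, resource, role_segments=ROLE_SEGMENTS):
    """True when the resource's segment is within the role's scope."""
    return resource["segment"] in role_segments.get(role, set())
```

Denying by default for unknown roles keeps a misconfigured or newly added account from seeing anything until it has been assigned a scope explicitly.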

Additionally, deploying Security Information and Event Management (SIEM) systems can aggregate and analyze security data across the organization to identify unusual patterns that might indicate a security incident, enabling quicker and more effective responses. Automated compliance reporting within these systems also aids in meeting regulatory requirements efficiently, providing detailed reports demonstrating adherence to standards like GDPR, HIPAA, or PCI DSS.

Optimizing User Experience Monitoring

User experience monitoring (UEM) is crucial for ensuring applications perform optimally from the user’s perspective, a critical factor in customer satisfaction and business success. UEM involves detailed tracking and analysis of how end-users interact with applications and how these interactions impact their overall experience. Key aspects include:

- Performance Monitoring: Observing page load times, transaction processes, and responsiveness to user inputs.

- Proactive Issue Detection: Utilizing real-time analytics to receive immediate alerts when performance metrics deviate from established baselines, allowing for swift corrective actions.

Implementing UEM tools enables IT teams to pinpoint areas where the user experience may fall short, such as slow response times or frequent transaction errors. For instance, if monitoring data indicates that a checkout process is frequently abandoned, a deeper analysis may reveal that delays at the payment stage are causing user frustration.
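
The checkout example can be made concrete by computing the drop-off between consecutive funnel stages. The stage names and the shape of the input are assumptions; the counts would come from whatever UEM tool records user sessions.

```python
def stage_dropoff(stage_counts):
    """Fraction of sessions lost between each stage and the next.

    `stage_counts` is an ordered list of (stage_name, sessions_reaching_stage).
    Returns a list of (stage_name, fraction_lost_before_next_stage).
    """
    dropoffs = []
    for (name, entered), (_, next_entered) in zip(stage_counts, stage_counts[1:]):
        lost = (entered - next_entered) / entered if entered else 0.0
        dropoffs.append((name, lost))
    return dropoffs


def worst_stage(stage_counts):
    """Name of the stage after which the largest share of sessions is lost."""
    return max(stage_dropoff(stage_counts), key=lambda item: item[1])[0]
```

If the payment stage turns out to lose the largest share of sessions, that is the place to look at response times and transaction errors first.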

To maximize the effectiveness of user experience monitoring, organizations should deploy tools that offer detailed analytics on user behavior and application performance. These tools should be well-integrated with existing IT infrastructure monitoring systems to ensure a holistic view of technical performance and user satisfaction, aligning IT initiatives with user needs and business objectives. This integrated approach helps maintain high standards of user experience and supports proactive improvements and innovations in application development and deployment.

Conclusion

In this guide, we have examined the essential components of robust IT infrastructure monitoring: infrastructure visibility, proactive alerting and notification, performance metrics and analytics, log and event management, scalability and flexibility, integration with DevOps practices, security monitoring, and user experience monitoring. Together, they underpin the reliability, efficiency, and security of IT operations. Organizations that implement them well are better positioned to succeed and stay ahead of the competition.

Infrared360 by Avada Software excels by addressing these essential components with its unique features:

- Agentless Monitoring: As an agentless monitoring and management solution, Infrared360 ensures seamless scalability and reduces the overhead of deploying agents on each device, making it easier to manage and less intrusive.

- Real-Time Monitoring: Its capabilities allow for immediate detection and alerts on issues, which is crucial for maintaining system uptime and quick responsiveness.

- Role-Based Access Control: Enhances security by ensuring that sensitive data and system functionalities are only accessible to authorized users, aligning with best practices for compliance and internal security.

We encourage you to explore the solution in more detail to truly understand how Infrared360 can elevate your IT monitoring strategy and help you efficiently manage your middleware and other IT components.

Discover how you can leverage Infrared360 to ensure your IT infrastructure is monitored and optimized for future growth and challenges:

- Visit our solution page to learn more about Infrared360.

- Contact our expert team for a personalized consultation.

Equip your business with the tools to maintain high performance and secure operations.